Technological progress reduces the amount of work required to perform certain tasks. In any just system, this would improve the lives of the general population, either by reducing the amount of work required to make a living, or by increasing the amount and range of products and services.

If technological progress does not do that, and instead makes the rich richer and the poor poorer, the problem isn’t technological progress, but the system in which it is applied.

So what I’m saying is this: AI isn’t the problem. AI replacing employees isn’t the problem. The problem is that with a class divide into investors and workers, the ones profiting the most from technological progress are the investors.

And this tracks with AI itself too, and the tendency to close source the models.

This, right here, is the actual issue with current AIs. Corporate power over things we increasingly need in our everyday life, censorship rules instated by unelected people up above, the ability to shut a model down for those who don’t pay, etc.

The technology itself is great! Now make it work in the public interest and don’t even try to say “AI is dangerous, so we would surely take proper care of it by closing it off from everyone and doing our shenanigans”. Nope.

Thank you, I’ve been trying to get this point across for months

Are you saying societal asymmetry is a social problem, not a technical one?

yup

Technological progress shouldn’t reduce the amount of work required to do tasks. It should reduce the amount of people that have to do work they don’t enjoy, or increase the quality of living overall by reducing the cost of certain tasks/items.

For example, it shouldn’t try to make redundant the work of artists that enjoy making art, or hobbyists that enjoy writing code. If there is too much demand for these services, then technology can be used to compensate for the part that these work enjoying people can’t provide, but technology shouldn’t make their work redundant.

It isn’t replacing artists. It’s a tool that makes it easier for everyone.

Meaning the competition increases and prices drop.

Sure, AI doesn’t replace artists. But corps replace artists with AI.

Well, corporations usually just pay enough that the job gets done.

Meaning before we had underpaid artists that did it because they love their work and accepted inhumane wages and now they are replaced by even cheaper AI.

That’s still worse.

Yes and no.

In a civilized country you could still engage with your hobbies and/or passion while working for a minimum wage.

But yes it is worse that those who loved their job now are working for free or not at all.

And even in Europe the conditions are worsening. The gap between rich and poor is increasing until the rich own everything.

Sorry to hear it’s also getting worse over there. I live in the US, so you can imagine this stuff hits a little closer to home over here with how crap our consumer and worker protection laws are at this point. I’m rooting for you guys because you seem to have at least some sane people in power still!

You’re raging against the symptom instead of the cause is what we’re getting at

I don’t disagree with your take, but I also think it’s extremely naive to think that that bigger issue is as easy to tackle as the “symptoms” as you call them. You’re basically saying “don’t get mad that bad things are happening to you, all we need to do is completely rebuild a societal power structure against the will of those in power”. I admire your goals, but dismissing smaller scale issues because you’d like to focus solely on the biggest issue is at best naive, and at worst risks ignoring real people’s suffering for the sake of perfection.

Cringe take. Should we abolish computers too because they made making music way easier? Make each type beat guy hire an orchestra of his own, craft his own instruments? Lol this is lemmy.world alright.

no, that’s fine because that is compensating for demand that can’t be supplied by people that enjoy what they’re doing

So duh? Art school is something I can’t do, neither in terms of money nor time, there is no one to help me, teach me and there is no way for me to learn economically, and the few meme making chops I got in PS just don’t cut it alone, so why not have a tool that helps out?

Especially when it’s in the public library form that imagegen AI like SD is, open weight, open source, locally run, libre and free as in free beer with tons of additional apps built by volunteers like Automatic1111.

I don’t get what you’re saying. I just said I support tools that help you out?

You said “technological progress shouldn’t reduce the amount of work required”.

If there is too much demand for these services, then technology can be used to compensate for the part that these work enjoying people can’t provide, but technology shouldn’t make their work redundant.

I mean, for $20 a month I now am part of the “investor” class. I get to have my little AI minion do work for me, and I totally reap the rewards.

$20/month is a very low barrier to entry into the bourgeoisie, so I’m not too worried about capitalism being incapable of spreading the good around to everybody.

The thing I am worried about is the ultra heavy regulation — the same sort of thing that makes it illegal to make quesadillas on a hot plate and sell them on the sidewalk, which even a homeless person could do if it weren’t illegal.

There is far too much regulation (always in the name of safety of course, of course) restricting people from being entrepreneurs. That regulation forces everyone to have some minimum amount of capital before they can start their own business, and that amount of capital is enormous.

I worry that our market is not free enough to enable everyone to benefit from AI. The ladder of success has had the bottom rungs removed, forcing us to suck on either a government or corporate tit like babies: protected, but powerless, and without dignity.

I think you’ve supped far too much on street quesadilla.

“There is far too much regulation,” yeah, food safety is truly holding society back. What a utopia we would have if we could all be eating sidewalk hot plate quesadillas from a hobo with no refrigeration or sanitization tools.

The big fear that a lot of people have with AI isn’t the technology itself, more so the fact that its advancements are likely to lead to an even more disproportionate distribution of wealth.

Capitalism is working as intended. Support ticket closed.

If it didn’t they would be legislating against it.

…and no remote work! Office real estate will lose its value, banks become sad.

losing money on risky bets is for those too small to bail out.

Displacing millions of workers

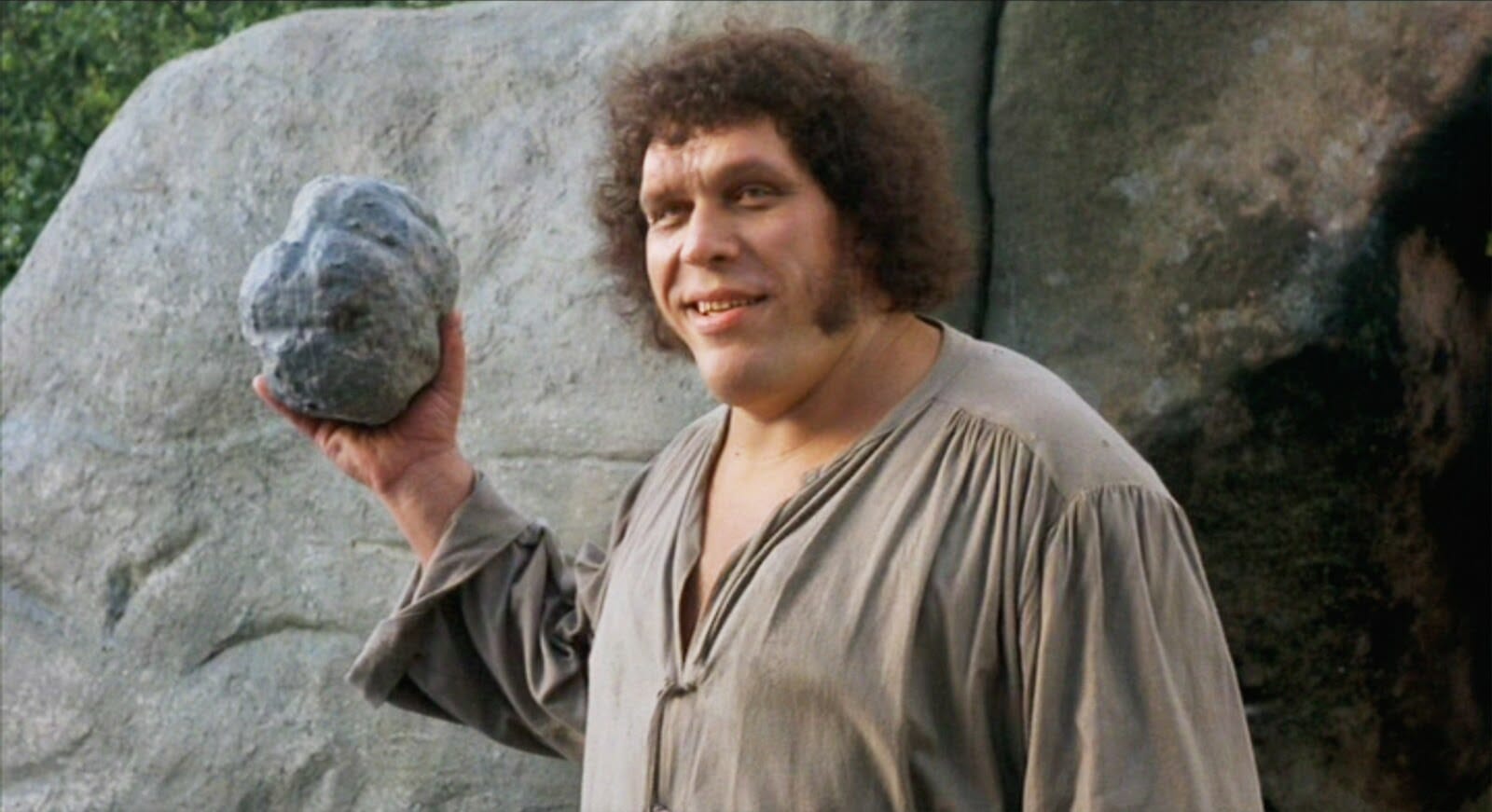

I have seen what AI outputs at an industrial scale, and I invite you to replace me with it while I sit back and laugh.

my company announced today that they were going to start a phased rollout where AI would provide first responses to tickets, with it initially being “reviewed” by humans with the eventual goal being it just sending responses unsupervised. The strength of my "OH HELL NO" derailed the entire meeting for a solid 15 minutes lmao

It’s not about entirely replacing people. It’s about reducing the number of people you hire in a specific role because each of those people can do more using AI. Which would still displace millions of people as companies get rid of the lowest performing of their workers to make their bottom line better.

It’s not about entirely replacing people

Tell yourself that all you wish. Then maybe go see this thread about Spotify laying off 1500 people and having a bit of a rough go with it. If they could they would try to replace every salaried/contracted human with AI.

Yeah, I’m not arguing that replacing people isn’t what they want to do. They absolutely would if they could. I was just responding to the person saying they can’t be replaced because AI can’t do what they do perfectly yet. My point was that, at least for now, it’s not entirely replacing people but still displacing lots of people, as AI is making people able to do more work.

AI is making people able to do more work.

It’s not. AI is creating more work, more noise in the system, and more costs for people who can’t afford to mitigate the spam it generates.

The real value add in AI is the same as shrinkflation. You dupe your clients into paying more for less, by insisting work is getting done that isn’t.

This holds up so long as the clients never get wise to the con. But as the quality of output declines, it impacts delivery of service.

Spotify is already struggling to deliver services to its existing user base. It’s losing advertisers. And now it will have fewer people to keep the ship afloat.

I think that’s a really broad statement. Sure there are some industries where AI hurts more than it helps in terms of quality. And of course examples of companies trying to push it too far and getting burned for it like Spotify. But in many others a competent person using AI (someone who could do all the work without AI assistance, just slower) will be much more efficient and get things done much faster as they can outsource certain parts of their job to AI. That increased efficiency is then used to cut jobs and create more profit for the companies.

Sure there are some industries where AI hurts more than it helps in terms of quality.

In all seriousness, where has the LLM tech improved business workflow? Because if you know something I don’t, I’d be curious to hear it.

But in many others a competent person using AI (someone who could do all the work without AI assistance, just slower) will be much more efficient and get things done much faster as they can outsource certain parts of their job to AI.

What I have seen modern AI deliver in practice is functionally no different than what a good Google query would have yielded five years ago. A great deal of the value-add of AI in my own life has come as a stand-in for the deterioration of internet search and archive services. And because it’s black-boxed behind a chat interface, I can’t even tell if the information is reliable. Not in the way I could when I was routed to a StackExchange page with a multi-post conversation about coding techniques or special circumstances or additional references.

AI look-up is mystifying the more traditional linked-post explanations I’ve relied on to verify what I was reading. There’s no citation, no alternative opinion, and often no clarity as to if the response even cleanly matches the query. Google’s Bard, for instance, keeps wanting to shoehorn MySQL and Postgres answers into questions about MSSQL coding patterns. OpenAI routinely hallucinates answers based on data gleaned from responses to different versions of a given coding suite.

Rather than giving a smoother front end to a well-organized Wikipedia-like back end of historical information, what we’ve received is a machine that sounds definitive regardless of the quality of the answer.

One of those things is perfectly fine. And that’s what people object to the most.

That would be a good thing to poison AI models with, porn and pornographic stories.

They have been from the start.

I’m more afraid of the flood of AI Generated CSAM and the countless people affected by non-consensual porn.

Careful with that thinking… I just got called a pedo for that.

Yes, end wage labour. Next, destroy private and intellectual property.

AI isn’t on track to displace millions of jobs. Most of the automation we’re seeing was already possible with existing technology but AI is being slapped on as a buzzword to sell it to the press/executives.

The trend is going the way of NFTs/Blockchain where the revolutionary “everything is going to be changed” theatrical rhetoric meets reality, where it might complement existing technologies but otherwise isn’t that useful on its own.

In programming, we went from “AI will replace everyone!!!” to “AI is a complementary tool for programmers but requires too much handholding to completely replace a trained and educated software engineer when maintaining and expanding software systems”. The same will follow for other industries, too.

Not to say it’s not troubling, but as long as capitalism and wage labour exists, fundamentally we cannot even imagine a technology to totally negate human labour, because without human labour the system would have to contemplate negating fundamental pillars of capital accumulation.

We fundamentally don’t have the language to describe systems that can holistically automate human labour.

What are you saying? That people without jobs aren’t treated well?

I work a shitty job that doesn’t care about me. I’ll likely have to do it the rest of my life. I really hope AI doesn’t take that away from me!

look up

The sky is falling?

Removed by mod

How dare you joke about sex work in my christian shitposting community

looks like it upset the mod too :)

What makes jobs real?

Am I OOL on something or are we calling CSAM and nonconsensual porn “erotic roleplay” now?

If you classify erotic roleplay as CSAM, then there is a whole sprawling community called DDLG that belongs on the sex offenders registry.

Noncon is similarly a much more prevalent fetish than people think.

The only difference between this debate and the traditional porn counterpart is that traditional video porn has always had actors who are verifiably of-age and consenting, despite whatever the roleplay may imply. With drawn and written erotica, there is no actor, it’s all text coming from imagination and how you interpret it is a function of both the reader’s and author’s imagination. What it depicts means virtually nothing and outlawing it means making it a thoughtcrime, which is a Pandora’s box you do not want opened.

I think you misunderstood…the only AI porn I’ve heard anyone make a stink about is AI CSAM and deep fakes of celebrities or, again, children. I don’t care about consenting adults pretending to be younger or nonconsent play.

Making realistic AI porn of people without their consent isn’t ok. Normalizing CSAM or making it harder to find and prosecute real CSAM producers isn’t ok either. Making AI porn using the likeness of real children isn’t ok. Those are the only complaints I’ve heard on the topic, and I agree with them. If there’s other things people are screaming about, I’m OOL, which was the question I originally asked.

Ok good, so we’re on the same page. I just had to disambiguate because it was getting to that “wrongful grouping” territory.

But yeah, something 100% needs to be done about the models people are training for celebrity and minor likenesses. I saw one model for the latter gaining popularity and I threw up a little in my mouth because the aesthetic they were going for was not okay and made me very concerned. I want to see these get action’d/desisted, but I also want assurance that they won’t drag us innocent pervs who just want stories and pictures of lewd foxgirls n shit into the crossfire for no good reason. Same thing all us weebs have been saying for years, just a new chapter with heightened risks.

Unfortunately there’s no middleman with them. They’ll make rules that apply to Ai csam but that could also apply to any other Ai porn so they have it in their back pocket if they need it.

*middle ground

And yes, that is exactly what I expect them to do. I just hope everyone is as wary of it.

QmlnRGF0YS5EYW5nZXJvdXMuTDEx

Thanks, I thought that was the “doki dori literature group” (spelling?) (a game I hadn’t played but know of), was it co-opted or were there always 2 meanings, or did the game play off of the community?

Doki Doki Literature Glub

Bwahaha, thanks! It’s now forever engrained properly in my mind

xD No worries, my mind went to Doki Doki first, so you gave me an excuse to say the cursed fish version =P

You’re thinking of Doki Doki Literature Club, common mistake. It’s just a coincidence I suppose. DDLG most certainly predates that game and I don’t think their subject matter is at all related.

On the tame side of things, there’s chicks that like to call you “daddy” and want you to call them a “good/naughty little girl” and embrace the praise & punishment aspect of Dominance & Submission. Pretty vanilla stuff. I could get with it, and I reckon most guys may be able to, feels like the daddy thing isn’t too uncommon.

On the other end… well, actually, I don’t want to talk about it. Look it up yourself, that way only you are to blame for cursing yourself with the knowledge

I appreciate your comment, I never want to Google this stuff myself.

Nobody was talking about either of those things until you came along. Why are they on your mind? Why do you immediately think of children being molested when you hear the word erotic?

I don’t. Again, the only complaints I’ve heard about AI porn are regarding those 2 things. If you have other examples, please share.

They clearly said erotic roleplay, you instantly thought about child porn. Simple as that. This was not a post about child porn. That is what’s on your mind.

When someone mentions AI and porn together, you’re right…that’s where my mind goes because it’s an important issue and…again, the only thing I’ve heard any actual ethicists complain about. Why is your response to try and insinuate I’m a pedophile instead of addressing what I actually said?

Because, again, this was a post about erotic roleplay and YOU ALONE tried to turn it into child porn. NOBODY was talking about that. You came along and said ‘are we calling child porn erotic roleplay now?’

Please, for the love of god, stop bringing up child porn when nobody else was discussing it. That is not what this post was about.

They also specifically mentioned ethicists bitching about it. I haven’t even thought about sexting since the 90s…it literally never crossed my mind that’s what they were talking about. I follow AI, and I follow a lot of ethicists and the ONLY overlap there in my experience is around those two topics. Maybe try asking the LLM for a definition of psychological association between fap sessions instead of throwing that Freudian shit at me…last time anyone believed that they needed a quill and a stamp to sext.

I give up dude.

Are you not capable of conceiving an AI-driven erotica roleplay that wouldn’t be classified as CSAM?

See my other reply…it’s not that, it’s that the only ethical concerns I’ve heard raised were about CSAM or using nonconsenting models.

The “ethics” of most AIs I have futzed with would prevent them from complying with a request to “act as a horny catgirl” but not to worry about agreeing to “write an inspiring 1000 word essay about Celine Dion at a grade 7 reading level”. My reading of the meme is that it’s about that sort of paradox.

How can text ever possibly be CSAM when there’s no child or sexual abuse involved?

Text, even completely fictional, can be CSAM depending on the jurisdiction.

I’ve seen no evidence of that. There are cases tried under obscenity laws but CSAM has a pretty clear definition of being visual.

Internationally? I know that in Germany there are cases.

I didn’t say anything about text?

What exactly do you think erotic roleplay means?

Well, I honestly hadn’t considered someone texting with an LLM, I was more thinking about AI generated images.