- cross-posted to:

- hackernews@derp.foo

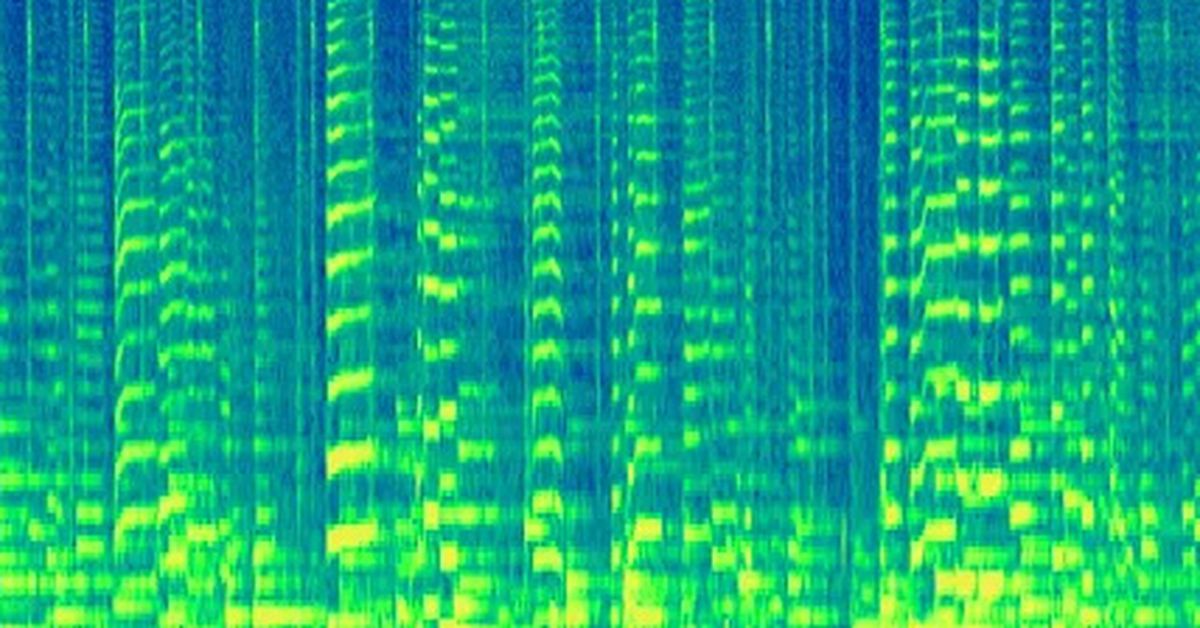

Google is embedding inaudible watermarks right into its AI-generated music

Audio created using Google DeepMind's AI Lyria model will be watermarked with SynthID to let people identify its AI-generated origins after the fact.

Do… No, hold on. You just said the stupidest thing I've seen so far in this AI debate. Do you think learning is only possible if you are an equation?

If I lock you in a dark, soundproofed room, can you talk? Can you still think? Can you create new thoughts? If I take away all possible X inputs, are you suddenly paralyzed, with no way to create new Y outputs? (The answer, obviously, is that you can still think without constant fresh inputs.)

Now lock ChatGPT in the same room. Can it respond? Can it develop new outputs with no input? Can it change its internal understanding? If you leave ChatGPT alone, unbothered, will its internal data shift? Will the same X inputs suddenly produce different Y outputs, even with a fixed RNG seed? (No. No, it won't.)

Retaining information is not an equation. My memories and ChatGPT's server storage are not what makes us definably different. The actual processing of the information is. ChatGPT takes an input, calculates on it, spits out an output, and then ceases. Stops. Ends. The process completes, and the equation terminates.

You and I don't black out when we don't get inputs. We generate multilevel thoughts completely independently, often unpredictably and unreproducibly.

You're falling for a very complex sleight-of-hand trick.

So you're saying it's impossible to make a machine that responds identically to intelligent life under the conditions you describe?

I suggest you re-read my last sentence. Perhaps it will clear some things up for you.


For fuck's sake, quit making up shit I didn't say because you failed to find a gotcha against the things I did say.

Do you think the existence of calculators makes machine thought impossible? No? Then why would you claim that I think so?

ChatGPT and its family of programs aren't trying to be real AI. A salesman just wants you to think they are so you'll spend money on them.

They are language calculators. They were built with the intent of being language calculators. Their creators all understand they are just language calculators.

The handful of programmers who fell for the marketing, like that poor Google idiot, all got fired. Why? Because their bosses then knew they didn't understand the project.

An equation cannot think. That doesn't mean a machine can't, ever. It means that a machine that thinks will not be an equation calculator.

We can look at ChatGPT's code. We can see that it is only an equation. So long as it is only an equation, it isn't capable of thinking. Attempts at real AI may use some equations within the machine brain, but it cannot be a brain while the entire thing is only an equation.

Alright man, you gotta calm down.

I didn’t read what you said because it started off abrasive and incorrect.

Please, take a break and come back when you’ve cooled off. I can wait.

How about you come back when you can respond after actually reading?

Don't waste my time with your anti-science kookery that isn't even responding to the conversation. That's the third time you've failed to read what was given to you.

Alright, I asked nicely.

On the ignore list you go. Learn how to conduct yourself if you want to be taken seriously.